📋 Model Description

pipeline_tag: image-text-to-text

datasets:

- openbmb/RLAIF-V-Dataset

- multilingual

- minicpm-v

- vision

- ocr

- multi-image

- video

- custom_code

A GPT-4o Level MLLM for Single Image, Multi Image and High-FPS Video Understanding on Your Phone

GitHub | CookBook | Technical Report | Demo

MiniCPM-V 4.5

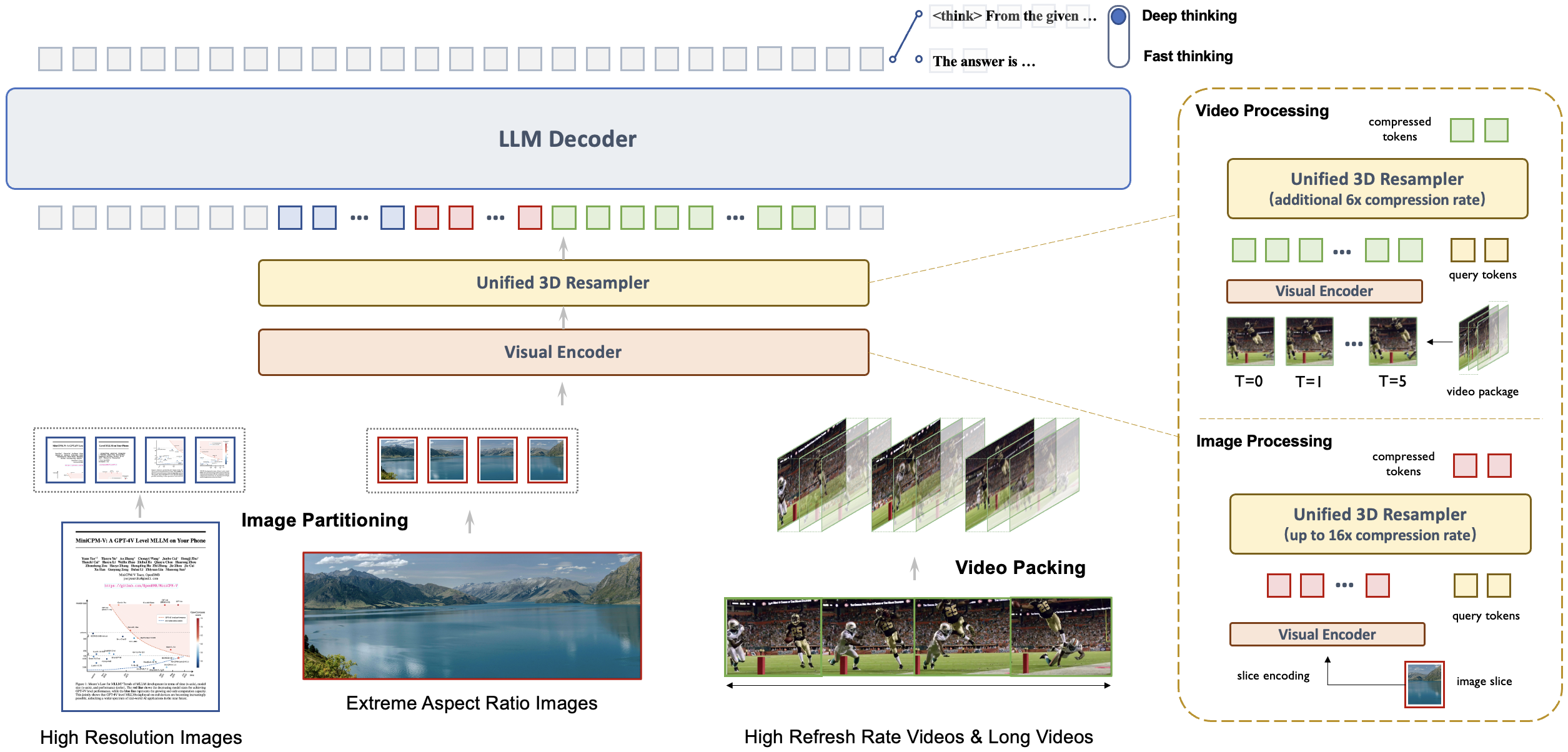

MiniCPM-V 4.5 is the latest and most capable model in the MiniCPM-V series. The model is built on Qwen3-8B and SigLIP2-400M with a total of 8B parameters. It exhibits a significant performance improvement over previous MiniCPM-V and MiniCPM-o models, and introduces new useful features. Notable features of MiniCPM-V 4.5 include:

- 🔥 State-of-the-art Vision-Language Capability.

- 🎬 Efficient High-FPS and Long Video Understanding. Powered by a new unified 3D-Resampler over images and videos, MiniCPM-V 4.5 achieves a 96x compression rate for video tokens: six 448x448 video frames can be jointly compressed into 64 video tokens (most MLLMs would normally use 1,536 tokens). The model can therefore perceive significantly more video frames without increasing the LLM inference cost. The result is efficient, state-of-the-art high-FPS (up to 10 FPS) and long video understanding on Video-MME, LVBench, MLVU, MotionBench, FavorBench, and other benchmarks.

- ⚙️ Controllable Hybrid Fast/Deep Thinking. MiniCPM-V 4.5 supports both fast thinking for efficient frequent usage with competitive performance, and deep thinking for more complex problem solving. To cover efficiency and performance trade-offs in different user scenarios, this fast/deep thinking mode can be switched in a highly controlled fashion.

- 💪 Strong OCR, Document Parsing and Others.

- 💫 Easy Usage.
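The compression figures quoted in the video bullet above can be checked with a little arithmetic. The sketch below is only a sanity check under two assumptions that this card does not state: a 14-pixel vision patch size, and 256 tokens per frame for a typical MLLM. They are simply the values that reproduce the quoted 96x and 1,536 numbers.

```python
# Sanity check of the quoted video-token compression figures.
# PATCH_SIDE and BASELINE_TOKENS_PER_FRAME are assumptions, not values from the card.
FRAME_SIDE = 448                  # frame resolution quoted in the card
PATCH_SIDE = 14                   # assumed vision patch size
FRAMES_PER_GROUP = 6              # frames compressed jointly
TOKENS_PER_GROUP = 64             # tokens produced per group of frames
BASELINE_TOKENS_PER_FRAME = 256   # assumed per-frame cost of a typical MLLM


def patches_per_frame(frame_side: int, patch_side: int) -> int:
    """Number of square patches in a square frame."""
    per_side = frame_side // patch_side
    return per_side * per_side


raw_patches = patches_per_frame(FRAME_SIDE, PATCH_SIDE) * FRAMES_PER_GROUP
compression_rate = raw_patches / TOKENS_PER_GROUP
baseline_tokens = BASELINE_TOKENS_PER_FRAME * FRAMES_PER_GROUP
saving_vs_baseline = baseline_tokens / TOKENS_PER_GROUP

print(f"raw patches per group: {raw_patches}")
print(f"compression rate: {compression_rate:.0f}x")
print(f"baseline tokens per group: {baseline_tokens}")
print(f"fewer LLM tokens than baseline: {saving_vs_baseline:.0f}x")
```

Under these assumptions, the 96x figure is measured against raw vision patches, while the saving relative to a 1,536-token baseline is smaller.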

Key Techniques

- Architecture: Unified 3D-Resampler for High-density Video Compression. MiniCPM-V 4.5 introduces a 3D-Resampler that overcomes the performance-efficiency trade-off in video understanding. By grouping and jointly compressing up to 6 consecutive video frames into just 64 tokens (the same token count used for a single image in the MiniCPM-V series), MiniCPM-V 4.5 achieves a 96× compression rate for video tokens. This allows the model to process more video frames without additional LLM computational cost, enabling high-FPS video and long video understanding. The architecture supports unified encoding for images, multi-image inputs, and videos, ensuring seamless capability and knowledge transfer.

- Pre-training: Unified Learning for OCR and Knowledge from Documents. Existing MLLMs learn OCR capability and knowledge from documents in isolated training approaches. We observe that the essential difference between these two training approaches is the visibility of the text in images. By dynamically corrupting text regions in documents with varying noise levels and asking the model to reconstruct the text, the model learns to adaptively and properly switch between accurate text recognition (when text is visible) and multimodal context-based knowledge reasoning (when text is heavily obscured). This eliminates reliance on error-prone document parsers in knowledge learning from documents, and prevents hallucinations from over-augmented OCR data, resulting in top-tier OCR and multimodal knowledge performance with minimal engineering overhead.

- Post-training: Hybrid Fast/Deep Thinking with Multimodal RL. MiniCPM-V 4.5 offers a balanced reasoning experience through two switchable modes: fast thinking for efficient daily use and deep thinking for complex tasks. Using a new hybrid reinforcement learning method, the model jointly optimizes both modes, significantly enhancing fast-mode performance without compromising deep-mode capability. Incorporated with RLPR and RLAIF-V, it generalizes robust reasoning skills from broad multimodal data while effectively reducing hallucinations.
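The text-corruption idea from the pre-training bullet can be illustrated with a toy example. This is a minimal sketch and not the authors' pipeline: the function name `corrupt_text_regions`, the `(x0, y0, x1, y1)` box format, the uniform-noise blending, and the grayscale list-of-lists image are all assumptions made for illustration.

```python
import random


def corrupt_text_regions(image, boxes, rng, min_level=0.0, max_level=1.0):
    """Blend random noise into each text box at a randomly drawn level.

    image: 2D list of grayscale pixel values in [0, 255] (hypothetical format).
    boxes: list of (x0, y0, x1, y1) text regions, end-exclusive.
    Returns a corrupted copy and the noise level used for each box.
    """
    out = [row[:] for row in image]          # leave the input untouched
    levels = []
    for x0, y0, x1, y1 in boxes:
        level = rng.uniform(min_level, max_level)
        for y in range(y0, y1):
            for x in range(x0, x1):
                noise = rng.randint(0, 255)
                # level near 0 keeps the text readable, level near 1 hides it
                out[y][x] = round((1 - level) * out[y][x] + level * noise)
        levels.append(level)
    return out, levels


rng = random.Random(0)
page = [[255] * 16 for _ in range(8)]        # blank 16x8 "document"
boxes = [(2, 1, 10, 3), (2, 5, 14, 7)]       # two made-up text regions
corrupted, levels = corrupt_text_regions(page, boxes, rng)
print([round(level, 2) for level in levels])
```

In a setup like the one described, the training target for each box would be its original text: lightly corrupted boxes exercise recognition, while heavily corrupted ones force the model to infer the text from surrounding context.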

Evaluation

Inference Efficiency

OpenCompass

| Model | Size | Avg Score ↑ | Total Inference Time ↓ |
|---|---|---|---|
| GLM-4.1V-9B-Thinking | 10.3B | 76.6 | 17.5h |
| MiMo-VL-7B-RL | 8.3B | 76.4 | 11h |
| MiniCPM-V 4.5 | 8.7B | 77.0 | 7.5h |

Video-MME

| Model | Size | Avg Score ↑ | Total Inference Time ↓ | GPU Mem ↓ |
|---|---|---|---|---|
| Qwen2.5-VL-7B-Instruct | 8.3B | 71.6 | 3h | 60G |
| GLM-4.1V-9B-Thinking | 10.3B | 73.6 | 2.63h | 32G |
| MiniCPM-V 4.5 | 8.7B | 73.5 | 0.26h | 28G |

Both Video-MME and OpenCompass were evaluated using 8×A100 GPUs for inference. The reported inference time of Video-MME includes full model-side computation, and excludes the external cost of video frame extraction (dependent on specific frame extraction tools) for fair comparison.
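To put the reported times in relative terms, the snippet below divides each model's total inference time by that of MiniCPM-V 4.5. It uses only the numbers from the two tables above; the helper name `relative_cost` is made up for this illustration.

```python
# Total inference times in hours, copied from the two tables above.
opencompass_hours = {
    "GLM-4.1V-9B-Thinking": 17.5,
    "MiMo-VL-7B-RL": 11.0,
    "MiniCPM-V 4.5": 7.5,
}
video_mme_hours = {
    "Qwen2.5-VL-7B-Instruct": 3.0,
    "GLM-4.1V-9B-Thinking": 2.63,
    "MiniCPM-V 4.5": 0.26,
}


def relative_cost(hours, reference="MiniCPM-V 4.5"):
    """Inference time of each model as a multiple of the reference model."""
    ref = hours[reference]
    return {name: round(t / ref, 2) for name, t in hours.items()}


for title, table in [("OpenCompass", opencompass_hours), ("Video-MME", video_mme_hours)]:
    print(title)
    for name, ratio in relative_cost(table).items():
        print(f"  {name}: {ratio}x")
```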

Examples

We deploy MiniCPM-V 4.5 on an iPad M4 using the iOS demo. The demo video is an unedited raw screen recording.

Framework Support Matrix

| Category | Framework | Cookbook Link | Upstream PR | Supported since (branch) | Supported since (release) |
|---|---|---|---|---|---|
| Edge (On-device) | Llama.cpp | Llama.cpp Doc | #15575 (2025-08-26) | master (2025-08-26) | b6282 |
| Edge (On-device) | Ollama | Ollama Doc | #12078 (2025-08-26) | Merging | Waiting for official release |
| Serving (Cloud) | vLLM | vLLM Doc | #23586 (2025-08-26) | main (2025-08-27) | v0.10.2 |
| Serving (Cloud) | SGLang | SGLang Doc | #9610 (2025-08-26) | Merging | Waiting for official release |
| Finetuning | LLaMA-Factory | LLaMA-Factory Doc | #9022 (2025-08-26) | main (2025-08-26) | Waiting for official release |
| Quantization | GGUF | GGUF Doc | - | - | - |
| Quantization | BNB | BNB Doc | - | - | - |
| Quantization | AWQ | AWQ Doc | - | - | - |
| Demos | Gradio Demo | Gradio Demo Doc | - | - | - |

Note: If you'd like us to prioritize support for another open-source framework, please let us know via this short form.

Usage

If you wish to enable thinking mode, provide the argument enable_thinking=True to the chat function.

#### Chat with Image

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

torch.manual_seed(100)

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True,  # or openbmb/MiniCPM-o-2_6
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)  # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True)  # or openbmb/MiniCPM-o-2_6

image = Image.open('./assets/minicpmo2_6/show_demo.jpg').convert('RGB')

enable_thinking = False  # If enable_thinking=True, the thinking mode is enabled.
stream = True  # If stream=True, the answer is returned in chunks that are iterated over below.

# First round chat
question = "What is the landform in the picture?"
msgs = [{'role': 'user', 'content': [image, question]}]

answer = model.chat(
    msgs=msgs,
    tokenizer=tokenizer,
    enable_thinking=enable_thinking,
    stream=stream
)

generated_text = ""
for new_text in answer:
    generated_text += new_text
    print(new_text, flush=True, end='')

# Second round chat, pass history context of multi-turn conversation
msgs.append({"role": "assistant", "content": [generated_text]})
msgs.append({"role": "user", "content": ["What should I pay attention to when traveling here?"]})

answer = model.chat(
    msgs=msgs,
    tokenizer=tokenizer,
    stream=stream
)

generated_text = ""
for new_text in answer:
    generated_text += new_text
    print(new_text, flush=True, end='')
```

You will get the following output:

Round 1:

The landform in the picture is karst topography. Karst landscapes are characterized by distinctive, jagged limestone hills or mountains with steep, irregular peaks and deep valleys—exactly what you see here These unique formations result from the dissolution of soluble rocks like limestone over millions of years through water erosion.

This scene closely resembles the famous karst landscape of Guilin and Yangshuo in China’s Guangxi Province. The area features dramatic, pointed limestone peaks rising dramatically above serene rivers and lush green forests, creating a breathtaking and iconic natural beauty that attracts millions of visitors each year for its picturesque views.

Round 2:

When traveling to a karst landscape like this, here are some important tips:

- Wear comfortable shoes: The terrain can be uneven and hilly.

- Bring water and snacks for energy during hikes or boat rides.

- Protect yourself from the sun with sunscreen, hats, and sunglasses—especially since you’ll likely spend time outdoors exploring scenic spots.

- Respect local customs and nature regulations by not littering or disturbing wildlife.

By following these guidelines, you'll have a safe and enjoyable trip while appreciating the stunning natural beauty of places such as Guilin’s karst mountains.

#### Chat with Video

The 3D-Resampler compresses multiple frames into 64 tokens by introducing `temporal_ids`. To use it, organize your video data into two corresponding sequences:

- `frames`: `List[Image]`
- `temporal_ids`: `List[List[int]]`

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer
from decord import VideoReader, cpu  # pip install decord
from scipy.spatial import cKDTree
import numpy as np
import math

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True,  # or openbmb/MiniCPM-o-2_6
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)  # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True)  # or openbmb/MiniCPM-o-2_6

MAX_NUM_FRAMES = 180  # Maximum number of frames received after the videos are packed. The actual maximum number of valid frames is MAX_NUM_FRAMES * MAX_NUM_PACKING.
MAX_NUM_PACKING = 3   # Maximum packing number of video frames. Valid range: 1-6
TIME_SCALE = 0.1      # Temporal grid step in seconds

def map_to_nearest_scale(values, scale):
    # Snap each timestamp to the nearest point on the temporal grid.
    tree = cKDTree(np.asarray(scale)[:, None])
    _, indices = tree.query(np.asarray(values)[:, None])
    return np.asarray(scale)[indices]

def group_array(arr, size):
    # Split arr into consecutive chunks of at most `size` elements.
    return [arr[i:i+size] for i in range(0, len(arr), size)]

def encode_video(video_path, choose_fps=3, force_packing=None):
    def uniform_sample(l, n):
        gap = len(l) / n
        idxs = [int(i * gap + gap / 2) for i in range(n)]
        return [l[i] for i in idxs]

    vr = VideoReader(video_path, ctx=cpu(0))
    fps = vr.get_avg_fps()
    video_duration = len(vr) / fps

    if choose_fps * int(video_duration) <= MAX_NUM_FRAMES:
        packing_nums = 1
        choose_frames = round(min(choose_fps, round(fps)) * min(MAX_NUM_FRAMES, video_duration))
    else:
        packing_nums = math.ceil(video_duration * choose_fps / MAX_NUM_FRAMES)
        if packing_nums <= MAX_NUM_PACKING:
            choose_frames = round(video_duration * choose_fps)
        else:
            choose_frames = round(MAX_NUM_FRAMES * MAX_NUM_PACKING)
            packing_nums = MAX_NUM_PACKING

    frame_idx = [i for i in range(0, len(vr))]
    frame_idx = np.array(uniform_sample(frame_idx, choose_frames))

    if force_packing:
        packing_nums = min(force_packing, MAX_NUM_PACKING)

    print(video_path, ' duration:', video_duration)
    print(f'get video frames={len(frame_idx)}, packing_nums={packing_nums}')

    frames = vr.get_batch(frame_idx).asnumpy()

    # Convert frame indices to timestamps, then to integer IDs on the TIME_SCALE grid.
    frame_idx_ts = frame_idx / fps
    scale = np.arange(0, video_duration, TIME_SCALE)

    frame_ts_id = map_to_nearest_scale(frame_idx_ts, scale) / TIME_SCALE
    frame_ts_id = frame_ts_id.astype(np.int32)

    assert len(frames) == len(frame_ts_id)

    frames = [Image.fromarray(v.astype('uint8')).convert('RGB') for v in frames]
    frame_ts_id_group = group_array(frame_ts_id, packing_nums)

    return frames, frame_ts_id_group

video_path = "video_test.mp4"
fps = 5  # fps for video
force_packing = None  # You can set force_packing to ensure that 3D packing is forcibly enabled; otherwise, encode_video will dynamically set the packing quantity based on the duration.
frames, frame_ts_id_group = encode_video(video_path, fps, force_packing=force_packing)

question = "Describe the video"
msgs = [
    {'role': 'user', 'content': frames + [question]},
]

answer = model.chat(
    msgs=msgs,
    tokenizer=tokenizer,
    use_image_id=False,
    max_slice_nums=1,
    temporal_ids=frame_ts_id_group
)
print(answer)
```
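To make the shape of `temporal_ids` concrete, here is a small dependency-free sketch that mimics the timestamp-to-ID mapping and grouping from the video example above on made-up frame indices. The helper name `temporal_ids_for` is hypothetical, and it replaces the nearest-neighbour lookup with plain rounding, which is an approximation: the original snaps to a grid that stops before the video duration, so IDs near the very end of a clip may differ.

```python
TIME_SCALE = 0.1  # seconds per temporal ID step, as in the video example


def temporal_ids_for(frame_indices, fps, packing):
    """Map frame indices to integer time IDs and group them for packing.

    Each inner list holds the IDs of frames that would be compressed together.
    """
    ids = [int(round(idx / fps / TIME_SCALE)) for idx in frame_indices]
    return [ids[i:i + packing] for i in range(0, len(ids), packing)]


# Seven frames sampled every 0.2 s from a 30 fps video, packed three at a time.
groups = temporal_ids_for([0, 6, 12, 18, 24, 30, 36], fps=30.0, packing=3)
print(groups)  # [[0, 2, 4], [6, 8, 10], [12]]
```

The last group may be shorter than the packing size when the number of sampled frames is not a multiple of it.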

#### Chat with multiple images

The following Python code runs MiniCPM-V 4.5 with multiple images as input.

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)  # sdpa or flash_attention_2
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True)

image1 = Image.open('image1.jpg').convert('RGB')
image2 = Image.open('image2.jpg').convert('RGB')
question = 'Compare image 1 and image 2, tell me about the differences between image 1 and image 2.'

# All images go into a single user turn, followed by the question.
msgs = [{'role': 'user', 'content': [image1, image2, question]}]

answer = model.chat(
    msgs=msgs,
    tokenizer=tokenizer
)
print(answer)
```

#### In-context few-shot learning

The following Python code runs MiniCPM-V 4.5 with few-shot input.

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True)

question = "production date"

# Two worked examples (image plus expected answer), then the test image.
image1 = Image.open('example1.jpg').convert('RGB')
answer1 = "2023.08.04"
image2 = Image.open('example2.jpg').convert('RGB')
answer2 = "2007.04.24"
image_test = Image.open('test.jpg').convert('RGB')

msgs = [
    {'role': 'user', 'content': [image1, question]}, {'role': 'assistant', 'content': [answer1]},
    {'role': 'user', 'content': [image2, question]}, {'role': 'assistant', 'content': [answer2]},
    {'role': 'user', 'content': [image_test, question]}
]

answer = model.chat(
    msgs=msgs,
    tokenizer=tokenizer
)
print(answer)
```
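When the number of examples varies, the few-shot message list can be built programmatically. The helper below is a hypothetical convenience function, not part of the model's API. It only reproduces the message layout used in the few-shot example above, and the strings in the usage lines stand in for `PIL.Image` objects.

```python
def build_few_shot_msgs(examples, question, test_image):
    """Build a few-shot message list in the layout used above.

    examples: list of (image, answer) pairs used as demonstrations.
    question: the question repeated for every image.
    test_image: the image the model should actually answer for.
    """
    msgs = []
    for image, answer in examples:
        msgs.append({'role': 'user', 'content': [image, question]})
        msgs.append({'role': 'assistant', 'content': [answer]})
    # The final user turn has no assistant reply; the model fills it in.
    msgs.append({'role': 'user', 'content': [test_image, question]})
    return msgs


# Placeholder strings stand in for PIL images in this illustration.
msgs = build_few_shot_msgs(
    examples=[("<image1>", "2023.08.04"), ("<image2>", "2007.04.24")],
    question="production date",
    test_image="<image_test>",
)
print(len(msgs), [m['role'] for m in msgs])
```

The resulting list would be passed as `msgs` to `model.chat` exactly as in the example above.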

License

#### Model License

- The MiniCPM-o/V model weights and code are open-sourced under the Apache-2.0 license.

- To help us better understand and support our users, we would deeply appreciate it if you could consider optionally filling out a brief registration "questionnaire".

#### Statement

- As an LMM, MiniCPM-V 4.5 generates content by learning from a large amount of multimodal corpora, but it cannot comprehend, express personal opinions, or make value judgments. Anything generated by MiniCPM-V 4.5 does not represent the views and positions of the model developers.

- We will not be liable for any problems arising from the use of the MiniCPM-V models, including but not limited to data security issues, risk of public opinion, or any risks and problems arising from the misdirection, misuse, or dissemination of the model.

Key Techniques and Other Multimodal Projects

👏 Welcome to explore key techniques of MiniCPM-V 4.5 and other multimodal projects of our team:

VisCPM | RLPR | RLHF-V | LLaVA-UHD | RLAIF-V

Citation

If you find our work helpful, please consider citing our papers 📝 and liking this project ❤️!

```bib
@misc{yu2025minicpmv45cookingefficient,
      title={MiniCPM-V 4.5: Cooking Efficient MLLMs via Architecture, Data, and Training Recipe},
      author={Tianyu Yu and Zefan Wang and Chongyi Wang and Fuwei Huang and Wenshuo Ma and Zhihui He and Tianchi Cai and Weize Chen and Yuxiang Huang and Yuanqian Zhao and Bokai Xu and Junbo Cui and Yingjing Xu and Liqing Ruan and Luoyuan Zhang and Hanyu Liu and Jingkun Tang and Hongyuan Liu and Qining Guo and Wenhao Hu and Bingxiang He and Jie Zhou and Jie Cai and Ji Qi and Zonghao Guo and Chi Chen and Guoyang Zeng and Yuxuan Li and Ganqu Cui and Ning Ding and Xu Han and Yuan Yao and Zhiyuan Liu and Maosong Sun},
      year={2025},
      eprint={2509.18154},
      archivePrefix={arXiv},
      primaryClass={cs.LG},
      url={https://arxiv.org/abs/2509.18154},
}

@article{yao2024minicpm,
  title={MiniCPM-V: A GPT-4V Level MLLM on Your Phone},
  author={Yao, Yuan and Yu, Tianyu and Zhang, Ao and Wang, Chongyi and Cui, Junbo and Zhu, Hongji and Cai, Tianchi and Li, Haoyu and Zhao, Weilin and He, Zhihui and others},
  journal={Nat Commun 16, 5509 (2025)},
  year={2025}
}
```

📂 GGUF File List

| 📁 Filename | 📦 Size | ⚡ Download |
|---|---|---|
| `MiniCPM-V-4_5-F16.gguf` (LFS, FP16) | 15.26 GB | Download |
| `MiniCPM-V-4_5-Q4_0.gguf` (Recommended, LFS, Q4) | 4.45 GB | Download |
| `MiniCPM-V-4_5-Q4_K_M.gguf` (LFS, Q4) | 4.68 GB | Download |
| `MiniCPM-V-4_5-Q5_1.gguf` (LFS, Q5) | 5.77 GB | Download |
| `MiniCPM-V-4_5-Q5_K_M.gguf` (LFS, Q5) | 5.45 GB | Download |
| `MiniCPM-V-4_5-Q5_K_S.gguf` (LFS, Q5) | 5.33 GB | Download |
| `MiniCPM-V-4_5-Q6_K.gguf` (LFS, Q6) | 6.26 GB | Download |
| `MiniCPM-V-4_5-Q8_0.gguf` (LFS, Q8) | 8.11 GB | Download |
| `Model-8.2B-F16.gguf` (LFS, FP16) | 15.26 GB | Download |
| `ggml-model-Q4_0.gguf` (LFS, Q4) | 4.45 GB | Download |
| `ggml-model-Q4_1.gguf` (LFS, Q4) | 4.89 GB | Download |
| `ggml-model-Q4_K_M.gguf` (LFS, Q4) | 4.68 GB | Download |
| `ggml-model-Q4_K_S.gguf` (LFS, Q4) | 4.47 GB | Download |
| `ggml-model-Q5_0.gguf` (LFS, Q5) | 5.33 GB | Download |
| `ggml-model-Q5_1.gguf` (LFS, Q5) | 5.77 GB | Download |
| `ggml-model-Q5_K_M.gguf` (LFS, Q5) | 5.45 GB | Download |
| `ggml-model-Q5_K_S.gguf` (LFS, Q5) | 5.33 GB | Download |
| `ggml-model-Q6_K.gguf` (LFS, Q6) | 6.26 GB | Download |
| `ggml-model-Q8_0.gguf` (LFS, Q8) | 8.11 GB | Download |
| `mmproj-model-f16.gguf` (LFS, FP16) | 1.02 GB | Download |
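As a rough aid for choosing among the files listed above, the sketch below picks the largest `MiniCPM-V-4_5-*` file that fits a download budget. The helper name `largest_fitting` is hypothetical. It assumes the `mmproj-model-f16.gguf` projector is fetched alongside the language-model file, and it compares file sizes only, which is not the same as runtime memory use.

```python
# File sizes in GB, copied from the file list above.
MODEL_SIZES_GB = {
    "MiniCPM-V-4_5-F16.gguf": 15.26,
    "MiniCPM-V-4_5-Q4_0.gguf": 4.45,
    "MiniCPM-V-4_5-Q4_K_M.gguf": 4.68,
    "MiniCPM-V-4_5-Q5_1.gguf": 5.77,
    "MiniCPM-V-4_5-Q5_K_M.gguf": 5.45,
    "MiniCPM-V-4_5-Q5_K_S.gguf": 5.33,
    "MiniCPM-V-4_5-Q6_K.gguf": 6.26,
    "MiniCPM-V-4_5-Q8_0.gguf": 8.11,
}
MMPROJ_GB = 1.02  # mmproj-model-f16.gguf, assumed to be needed with any of the above


def largest_fitting(sizes, budget_gb, extra_gb=0.0):
    """Return the largest file whose size plus extra_gb fits the budget, or None."""
    fitting = {name: size for name, size in sizes.items() if size + extra_gb <= budget_gb}
    if not fitting:
        return None
    return max(fitting, key=fitting.get)


print(largest_fitting(MODEL_SIZES_GB, budget_gb=8.0, extra_gb=MMPROJ_GB))
print(largest_fitting(MODEL_SIZES_GB, budget_gb=5.0, extra_gb=MMPROJ_GB))
```

Larger files generally preserve more of the original precision, so picking the largest one that fits is a simple default; the list itself marks `MiniCPM-V-4_5-Q4_0.gguf` as recommended.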